3 papers accepted by NeurIPS 2024

We have 3 papers accepted by NeurIPS 2024. All three contribute to continual learning, covering online continual learning, generalized class-incremental learning, and continual learning of vision-language models. Details are listed below.

Paper 1: F-OAL: Forward-only Online Analytic Learning with Fast Training and Low Memory Footprint in Class Incremental Learning

Authors: Huiping Zhuang, Yuchen Liu, Run He, Kai Tong, Ziqian Zeng, Cen Chen, Yi Wang, Lap-Pui Chau

Abstract:

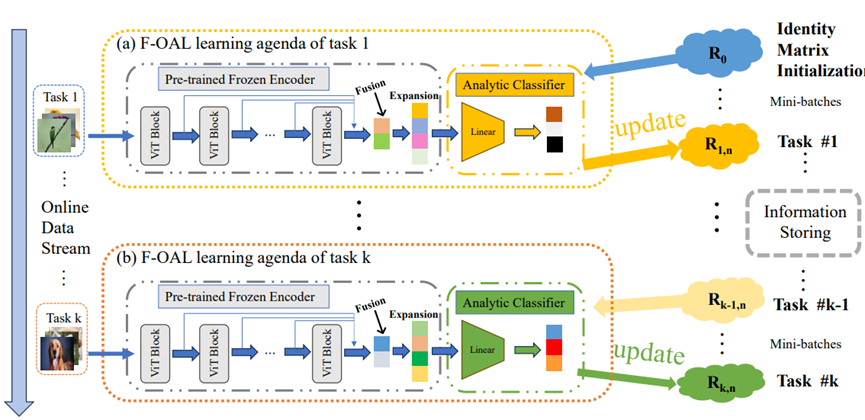

Online Class Incremental Learning (OCIL) aims to train models incrementally, where data arrive in mini-batches, and previous data are not accessible. A major challenge in OCIL is Catastrophic Forgetting, i.e., the loss of previously learned knowledge. Among existing baselines, replay-based methods show competitive results but require extra memory for storing exemplars, while exemplar-free (i.e., data need not be stored for replay in production) methods are resource friendly but often lack accuracy. In this paper, we propose an exemplar-free approach, Forward-only Online Analytic Learning (F-OAL). Unlike traditional methods, F-OAL does not rely on back-propagation and is forward-only, significantly reducing memory usage and computational time. Cooperating with a pre-trained frozen encoder with Feature Fusion, F-OAL only needs to update a linear classifier by recursive least squares. This approach simultaneously achieves high accuracy and low resource consumption. Extensive experiments on benchmark datasets demonstrate F-OAL’s robust performance in OCIL scenarios.

Paradigm of proposed F-OAL

Code: https://github.com/liuyuchen-cz/F-OAL
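
The recursive least squares update mentioned in the abstract can be illustrated with a minimal NumPy sketch. This is not taken from the F-OAL repository: the function names `rls_init` and `rls_update`, the regularization `gamma`, and the random features standing in for frozen-encoder outputs are all assumptions made for illustration. The sketch updates a linear classifier one mini-batch at a time without gradients and without storing past data, then compares the result with ridge regression fitted on all data at once.

```python
import numpy as np

def rls_init(dim, num_classes, gamma=1.0):
    """Start from zero weights and R = (gamma * I)^-1 (hypothetical helper)."""
    W = np.zeros((dim, num_classes))
    R = np.eye(dim) / gamma
    return W, R

def rls_update(W, R, X, Y):
    """One forward-only update from a mini-batch.

    X: (batch, dim) features, assumed to come from a frozen encoder.
    Y: (batch, num_classes) one-hot labels.
    """
    # Woodbury identity: refresh the inverse of (R^-1 + X^T X)
    # by solving a (batch x batch) system instead of a (dim x dim) one.
    K = np.linalg.solve(np.eye(X.shape[0]) + X @ R @ X.T, X @ R)
    R = R - R @ X.T @ K
    # Correct the weights using the prediction error on the new batch only.
    W = W + R @ X.T @ (Y - X @ W)
    return W, R

# Synthetic stand-in for encoder features and labels (illustrative only).
rng = np.random.default_rng(0)
dim, num_classes, gamma = 16, 4, 1.0
X_all = rng.normal(size=(200, dim))
labels = rng.integers(0, num_classes, size=200)
Y_all = np.eye(num_classes)[labels]

# Online pass: each mini-batch is seen once and then discarded.
W, R = rls_init(dim, num_classes, gamma)
for start in range(0, 200, 20):
    W, R = rls_update(W, R, X_all[start:start + 20], Y_all[start:start + 20])

# Reference: ridge regression fitted on all data jointly.
W_joint = np.linalg.solve(X_all.T @ X_all + gamma * np.eye(dim), X_all.T @ Y_all)
max_gap = np.abs(W - W_joint).max()
```

In this toy setting `max_gap` should be at the level of floating-point round-off, which is why a recursive update of this kind needs no replay buffer.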

Paper 2: GACL: Exemplar-Free Generalized Analytic Continual Learning

Authors: Huiping Zhuang, Yizhu Chen, Di Fang, Run He, Kai Tong, Hongxin Wei, Ziqian Zeng, Cen Chen

Abstract:

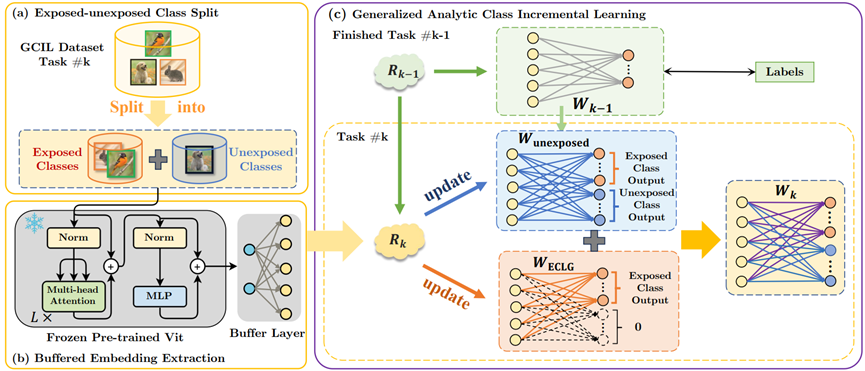

Class incremental learning (CIL) trains a network on sequential tasks with separated categories in each task but suffers from catastrophic forgetting, where models quickly lose previously learned knowledge when acquiring new tasks. The generalized CIL (GCIL) aims to address the CIL problem in a more real-world scenario, where incoming data have mixed data categories and unknown sample size distribution. Existing attempts for the GCIL either have poor performance or invade data privacy by saving exemplars. In this paper, we propose a new exemplar-free GCIL technique named generalized analytic continual learning (GACL). The GACL adopts analytic learning (a gradient-free training technique) and delivers an analytical (i.e., closed-form) solution to the GCIL scenario. This solution is derived via decomposing the incoming data into exposed and unexposed classes, thereby attaining a weight-invariant property, a rare yet valuable property supporting an equivalence between incremental learning and its joint training. Such an equivalence is crucial in GCIL settings as data distributions among different tasks no longer pose challenges to adopting our GACL. Theoretically, this equivalence property is validated through matrix analysis tools. Empirically, we conduct extensive experiments where, compared with existing GCIL methods, our GACL exhibits a consistently leading performance across various datasets and GCIL settings.

An overview of our proposed GACL

Code: https://github.com/CHEN-YIZHU/GACL
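
The equivalence between incremental learning and joint training mentioned in the abstract can be illustrated on plain ridge regression. The derivation below is a generic sketch, not the GACL derivation itself: the symbols $X_k$, $Y_k$ (features and one-hot labels of the $k$-th batch), $\gamma$ (regularization), $R_k$ and $W_k$ are assumptions introduced here, the number of classes is assumed fixed, and the decomposition into exposed and unexposed classes is omitted.

$$
W_k = R_k \sum_{i \le k} X_i^\top Y_i, \qquad R_k = \Big(\gamma I + \sum_{i \le k} X_i^\top X_i\Big)^{-1}
$$

is the joint solution on the first $k$ batches. Since $R_k^{-1} = R_{k-1}^{-1} + X_k^\top X_k$, the Woodbury identity gives

$$
R_k = R_{k-1} - R_{k-1} X_k^\top \big(I + X_k R_{k-1} X_k^\top\big)^{-1} X_k R_{k-1},
$$

and substituting $\sum_{i \le k-1} X_i^\top Y_i = R_{k-1}^{-1} W_{k-1}$ yields

$$
W_k = R_k \big( (R_k^{-1} - X_k^\top X_k) W_{k-1} + X_k^\top Y_k \big) = W_{k-1} + R_k X_k^\top \big(Y_k - X_k W_{k-1}\big).
$$

The recursive update therefore reproduces the joint solution exactly while touching only the current batch, regardless of how the data are split across batches.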

Paper 3: Advancing Cross-domain Discriminability in Continual Learning of Vision-Language Models

Authors: Yicheng Xu, Yuxin Chen, Jiahao Nie, Yusong Wang, Huiping Zhuang, Manabu Okumura

Abstract:

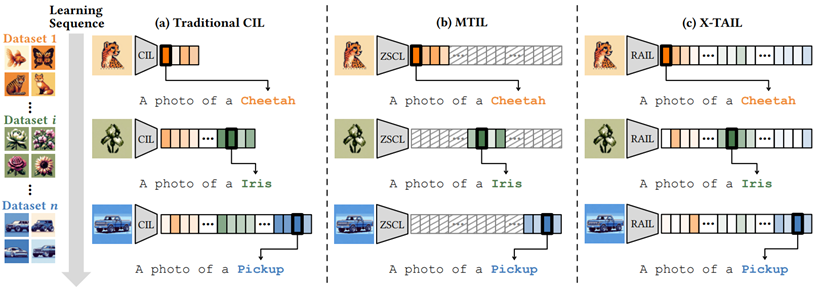

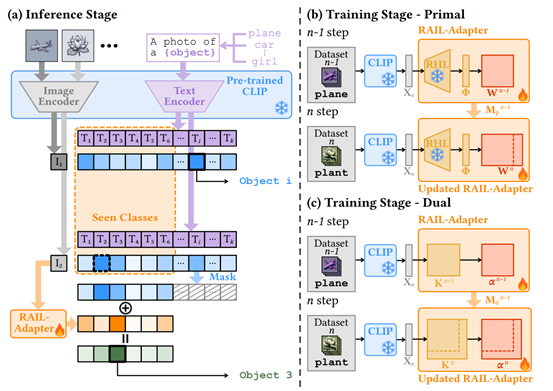

Continual learning (CL) with Vision-Language Models (VLMs) has overcome the constraints of traditional CL, which only focuses on previously encountered classes. During the CL of VLMs, we need not only to prevent catastrophic forgetting of incrementally learned knowledge but also to preserve the zero-shot ability of VLMs. However, existing methods require additional reference datasets to maintain such zero-shot ability and rely on domain-identity hints to classify images across different domains. In this study, we propose Regression-based Analytic Incremental Learning (RAIL), which utilizes a recursive ridge regression-based adapter to learn from a sequence of domains in a non-forgetting manner and decouples the cross-domain correlations by projecting features to a higher-dimensional space. Cooperating with a training-free fusion module, RAIL absolutely preserves the VLM’s zero-shot ability on unseen domains without any reference data. Additionally, we introduce the Cross-domain Task-Agnostic Incremental Learning (X-TAIL) setting. In this setting, a CL learner is required to incrementally learn from multiple domains and classify test images from both seen and unseen domains without any domain-identity hint. We theoretically prove RAIL’s absolute memorization on incrementally learned domains. Experimental results affirm RAIL’s state-of-the-art performance in both X-TAIL and existing Multi-domain Task-Incremental Learning settings.

Comparison of different CL settings in vision-language models

Overview of proposed RAIL

Paper: https://openreview.net/forum?id=boGxvYWZEq

Code: https://github.com/linghan1997/Regression-based-Analytic-Incremental-Learning